1/1

A reminder that local LLMs called by open source libraries will one day be a central part of this open source stack, making AI use in applications cost less to the developer.

To post tweets in this format, more info here: https://www.thecoli.com/threads/tips-and-tricks-for-posting-the-coli-megathread.984734/post-52211196

1/12

How is it that one of the most powerful ideas in the entire history of technology is now under coordinated attack?

If I told you that one little software concept powers the website you're reading this on, the router in your house that connects you to the internet, the phone in your pocket and your TV streaming service, what would you think?

What if I said that the NASDAQ and New York Stock Exchange, where your retirement portfolio trades, are powered by the same ideas? Or that those same concepts power 100% of the top 500 supercomputers on the planet, doing everything from hunting for cures for cancer to making rockets safer to mapping the human brain?

Not only that, it also powers all the major clouds, from Amazon Web Services to Google Cloud to Alibaba and more.

You'd probably think that was a good thing, right? Not just good, but absolutely critical to the functioning of modern life at every level. And you'd be right.

That critical infrastructure is "open source" and it powers the world.

It's everywhere, an invisible bedrock beneath our entire digital ecosystem, underpinning all the applications we take for granted every day.

It seems impossible that something that important would be under attack.

But that's exactly what's happening right now. Or rather, it's happening again. It's not the first time.

In the early days of open source, a powerful group of proprietary software makers went to war against open source, looking to kill it off.

The SCO Group, a proprietary Unix goliath, tried to sue Linux into oblivion under the guise of copyright violations.

Microsoft's former CEO, Steve Ballmer, called open source a cancer and communism and launched a massive anti-Linux marketing barrage. They failed. Today open source is the most successful software in history, the foundation of 90% of the planet's software, found in 95% of all enterprises.

Even Microsoft now runs on open source, with the majority of the Azure cloud powered by Linux and other open software like Kubernetes, plus thousands upon thousands of packages like Docker and Prometheus, machine learning frameworks like PyTorch, ONNX and DeepSpeed, managed databases like Postgres and MySQL, and more.

What if they'd succeeded in those early attacks on open source? They would have wrecked their own future revenue through short-sightedness and a total lack of vision.

And yet open source critics are like an ant infestation in your house. No matter how successful open source gets or how essential it becomes to critical infrastructure, they just keep coming back over and over and over.

Today's critics of open source AI cite security concerns and tell us that only a small group of companies can protect us from our enemies, so we've got to close everything down again and lock it all up behind closed doors.

But if open source is such a security risk, why does "the US Army [have] the single largest installed base for RedHat Linux" and why do many systems in the US Navy nuclear submarine fleet run on Linux, "including [many of] their sonar systems"? Why is it allowed to power our stock markets, our clouds and our seven top supercomputers that run our most top-secret workloads?

But they aren't stopping at criticism. They're pushing to strangle open source AI in its crib with California bills like SB 1047, which is set to choke out American AI research and development while tangling up open weights AI in suffocating red tape. Despite a massive groundswell of opposition from centrist and left Democrats, Republicans, hundreds of members of academia and more, the bill passed and now goes to the governor. Eight members of Congress called on the governor to veto it. Speaker Pelosi took the unprecedented step of openly opposing a bill in a Democrat-dominated state Assembly.

And yet still the bill presses forward.

Why?

Do too many people just not understand what open source means to the modern world?

Do they just not see how critical it is to everything from our power grid to our national security systems and to our economies?

And the answer is simple:

They don't.

That's because open source is invisible.

It runs in the background. It quietly does its job without anyone realizing that it's there. It just works. It's often not the interface to software but the engine of software: it's under the hood, not the hood itself. It runs our servers and routers and websites and machine learning systems. It's hidden just beneath the surface.

The average person has no idea what powers their Instagram and Facebook and TikTok and Wikipedia and their email servers and their WhatsApp and Signal. They don't know it's making their phone work or that the trades of their retirement portfolio depend on it.

And that's a problem.

Because if people don't even know it exists, how can we defend it?

2/12

**It's the End of the World as We Know It**

Even worse, that blindness to the essential software layer of the world is now putting tomorrow's tech stack under threat of being dominated by a small group of big closed source players, especially when it comes to AI.

Critics are fighting back against open source, terrified that if we all have access to powerful AI we'll face imaginary "catastrophic harms," and convinced that it must be stopped by any means necessary.

If they win, it will be a devastating loss for us all, because the revolutions in tech that made it so easy to get an education online, chat with friends all around the globe, find information on any topic at the click of a button, stream movies, play games, and find friendship and love are facing intense pressure from governments and activists the world over who want to clamp down on freedom and open access.

In private, they know their laws are about crippling AI development in America. They call them “anti-AI” bills in their private talks. But in public they carefully frame them as “AI safety” to make them go down nice and easy with the wider public.

What made the web so great was an open, decentralized approach that let anyone set up a website, share it with the world and talk to anyone else without intermediaries. It let people share software and build on the software others gifted them to solve countless problems.

But with each passing day, it looks more and more likely that we'll go from an open digital landscape to a world where civilian access to AI is severely locked down, restricted and censored, and where powerful closed source AIs block and control what you can do at every step.

That's because a small group of about a dozen non-profits, financed by three billionaires, including convicted criminal and fraudster Sam Bankman-Fried and Estonian billionaire Jaan Tallinn (who thinks we should outlaw advanced GPUs and enforce a strict crackdown to crush all strong AI development), has exploited this lack of awareness about the power and potential of open source. They've created an AI panic campaign that threatens tomorrow's tech stack and makes it increasingly likely that tomorrow's internet is a world of locked doors and gated access to information.

This extremist group is waging an information warfare campaign to terrify the public into thinking AI is dangerous, pushing increasingly restrictive anti-AI laws onto legislative agendas across the world, and driving a frenzied moral techno panic with increasingly unhinged claims about the end of the world.

They've completely failed to make any headway on stopping the worst abuses of AI, including autonomous weapons and mass surveillance, with zero treaties signed and zero laws passed. So they've turned their full force to restricting civilian access to AI and stopping the dreaded scourge of LLM chatbots, even though none of their actual fears have materialized. They've managed to get over 678 overlapping, conflicting and impossible-to-navigate bills moving forward in 45 states and counting in the US alone, while utterly failing to make even the most basic headway on truly dangerous AI use cases like spying on everyone at scale.

The irony of all this fear and panic is that these misguided folks are driving us right into the worst possible future.

It's a world where your AI can't answer questions honestly because the answers are considered "harmful" (this kind of censorship always escalates, because what's "harmful" is always defined by what people in power don't like), where information is gated instead of free, where open source models are killed off so university researchers can't work on medical segmentation and curing cancer (budget-conscious academics rely on open weights/open source models; they can fine-tune them but can't afford to train their own), and where we have killer robots and drones but your personal AI is utterly hobbled and lobotomized.

It's a world we've got to fight to stop at all costs.

To understand why, you just have to know a little about the history of how we got here.

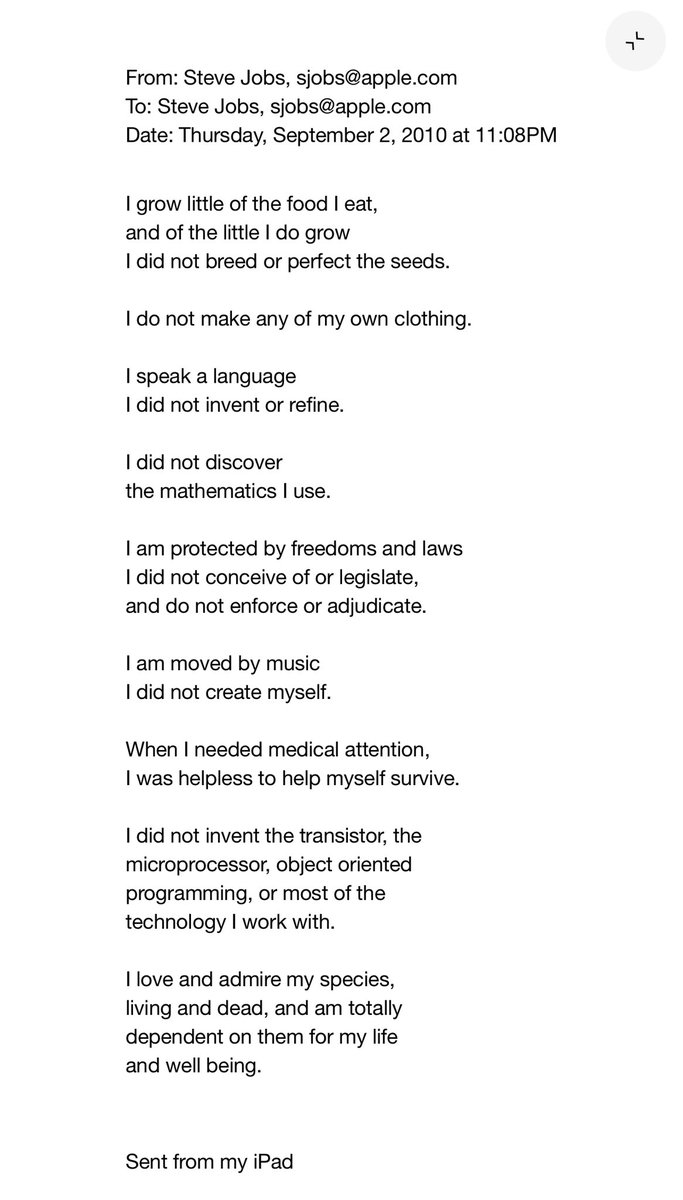
