From toy to tool: DALL-E 3 is a wake-up call for visual artists—and the rest of us

AI image synthesis is getting more capable at executing ideas, and it's not slowing down.

BENJ EDWARDS - 11/16/2023, 7:20 AM

A composite of three DALL-E 3 AI art generations: an oil painting of Hercules fighting a shark, a photo of the queen of the universe, and a marketing photo of "Marshmallow Menace" cereal.

DALL-E 3 / Benj Edwards

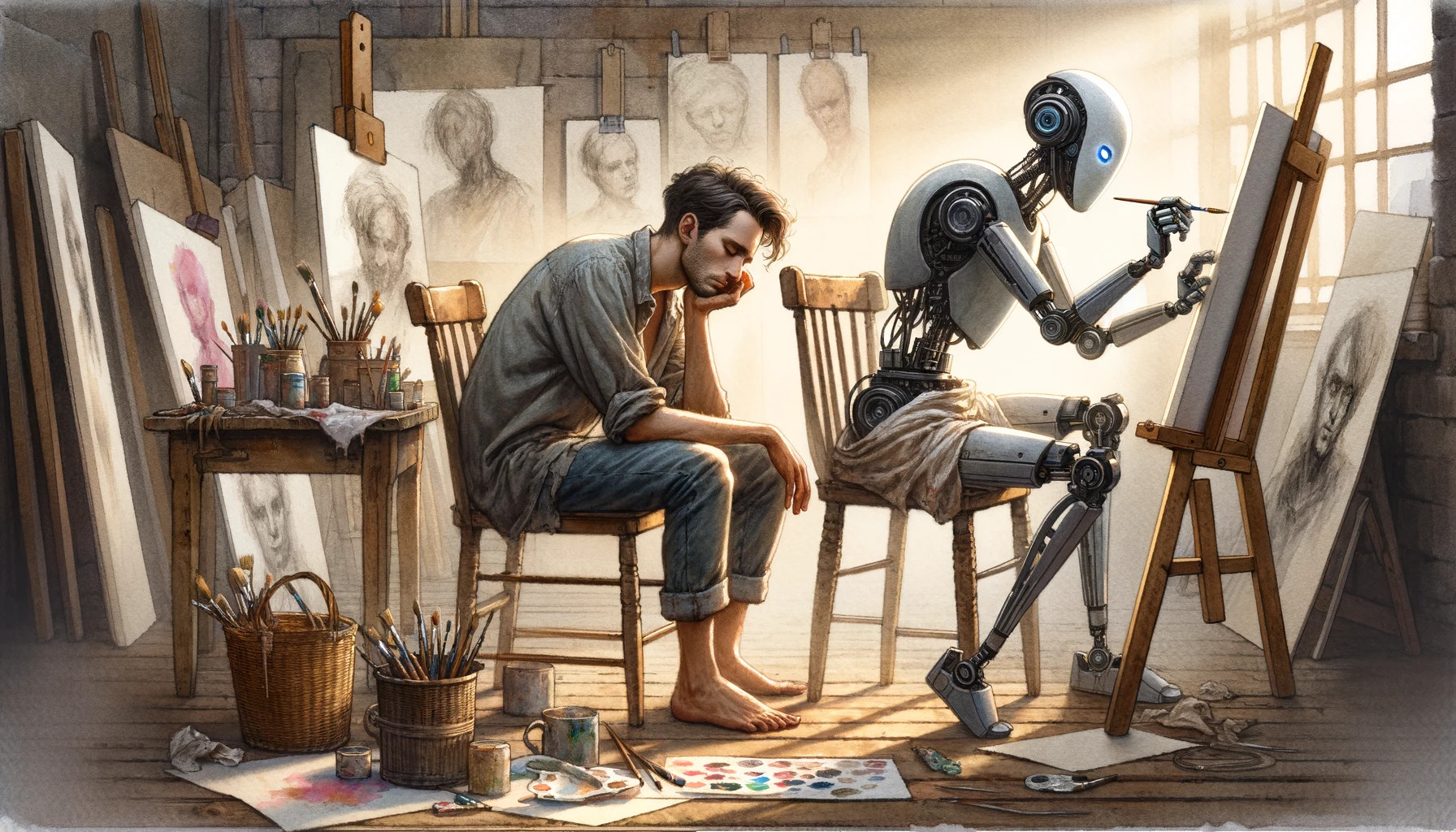

In October, OpenAI launched its newest AI image generator—DALL-E 3—into wide release for ChatGPT subscribers. DALL-E can pull off media generation tasks that would have seemed absurd just two years ago—and although it can inspire delight with its unexpectedly detailed creations, it also brings trepidation for some. Science fiction forecast tech like this long ago, but seeing machines upend the creative order feels different when it's actually happening before our eyes.

"It’s impossible to dismiss the power of AI when it comes to image generation," says Aurich Lawson, Ars Technica's creative director. "With the rapid increase in visual acuity and ability to get a usable result, there’s no question it’s beyond being a gimmick or toy and is a legit tool."

With the advent of AI image synthesis, it's looking increasingly like the future of media creation for many will come through the aid of creative machines that can replicate any artistic style, format, or medium. Media reality is becoming completely fluid and malleable. But how is AI image synthesis getting more capable so rapidly—and what might that mean for artists ahead?

Using AI to improve itself

We first covered DALL-E 3 upon its announcement from OpenAI in late September, and since then, we've used it quite a bit. For those just tuning in, DALL-E 3 is an AI model (a neural network) that uses a technique called latent diffusion to pull images it "recognizes" out of noise, progressively, based on written prompts provided by a user (or, in this case, by ChatGPT). It works using the same underlying technique as other prominent image synthesis models like Stable Diffusion and Midjourney. You type in a description of what you want to see, and DALL-E 3 creates it.
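
To make the "pull images out of noise, progressively" idea concrete, here is a minimal Python sketch of a diffusion sampling loop. It is not DALL-E 3's code, which OpenAI has not published. It assumes a deterministic DDIM-style update (a common sampler, swapped in here for illustration), a four-number toy "latent," and a hypothetical `predict_noise` function that cheats by looking up the right answer for each prompt, standing in for the trained network. The names `TOY_LATENTS`, `predict_noise`, and `sample` are illustrative only.

```python
import math
import random

# Hypothetical stand-in for a trained, text-conditioned denoising network.
# A real model *learns* to predict noise; this toy "cheats" by knowing the
# clean latent each prompt should produce, so only the sampling loop is realistic.
TOY_LATENTS = {
    "a red square": [1.0, -1.0, 0.5, 0.0],
    "a blue circle": [-0.5, 0.0, 1.0, 1.0],
}


def predict_noise(x_t, alpha_bar, prompt):
    """Return the noise component of x_t at noise level alpha_bar."""
    x0 = TOY_LATENTS[prompt]
    return [
        (xt - math.sqrt(alpha_bar) * c) / math.sqrt(1.0 - alpha_bar)
        for xt, c in zip(x_t, x0)
    ]


def sample(prompt, steps=50, seed=0):
    """Deterministic (DDIM-style) sampling: start from noise, denoise step by step."""
    rng = random.Random(seed)
    dim = len(TOY_LATENTS[prompt])
    x = [rng.gauss(0.0, 1.0) for _ in range(dim)]  # start from pure Gaussian noise

    # alpha_bar rises from ~0 (almost all noise) to exactly 1 (clean signal).
    schedule = [0.001 + 0.999 * (i / steps) ** 2 for i in range(steps + 1)]
    schedule[-1] = 1.0

    for a, a_next in zip(schedule[:-1], schedule[1:]):
        eps = predict_noise(x, a, prompt)
        # Estimate the clean latent, then re-noise it to the next, lower noise level.
        x0_hat = [(xt - math.sqrt(1.0 - a) * e) / math.sqrt(a) for xt, e in zip(x, eps)]
        x = [
            math.sqrt(a_next) * c + math.sqrt(1.0 - a_next) * e
            for c, e in zip(x0_hat, eps)
        ]
    # In a latent diffusion model, a decoder would now turn this latent into pixels.
    return x


print(sample("a red square"))
```

In a real latent diffusion model, the noise predictor is a large neural network conditioned on an encoding of the prompt, its predictions are imperfect, and the final latent goes through a decoder to become an image; the sketch only tries to capture the shape of the loop.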

ChatGPT and DALL-E 3 currently work hand-in-hand, turning AI art generation into an interactive, conversational experience. You tell ChatGPT (through the GPT-4 large language model) what you'd like it to generate, and it writes the prompts for you and submits them to the DALL-E backend. DALL-E returns the images (usually two at a time), and they appear in the ChatGPT interface, whether on the web or in the ChatGPT app.

An AI-generated image of a fictional "Beet Bros." arcade game, created by DALL-E 3.

DALL-E 3 / Benj Edwards

An AI-generated image of Abraham Lincoln holding a sign that is intended to say "Ars Technica," created by DALL-E 3.

DALL-E 3 / Benj Edwards

An AI-generated image of autumn leaves, created by DALL-E 3.

DALL-E 3 / Benj Edwards

An AI-generated image of a pixelated Christmas scene created by DALL-E 3.

DALL-E 3 / Benj Edwards

An AI-generated image of a neon shop sign created by DALL-E 3.

DALL-E 3 / Benj Edwards

An AI-generated image of a plate of pickles, created by DALL-E 3.

DALL-E 3 / Benj Edwards

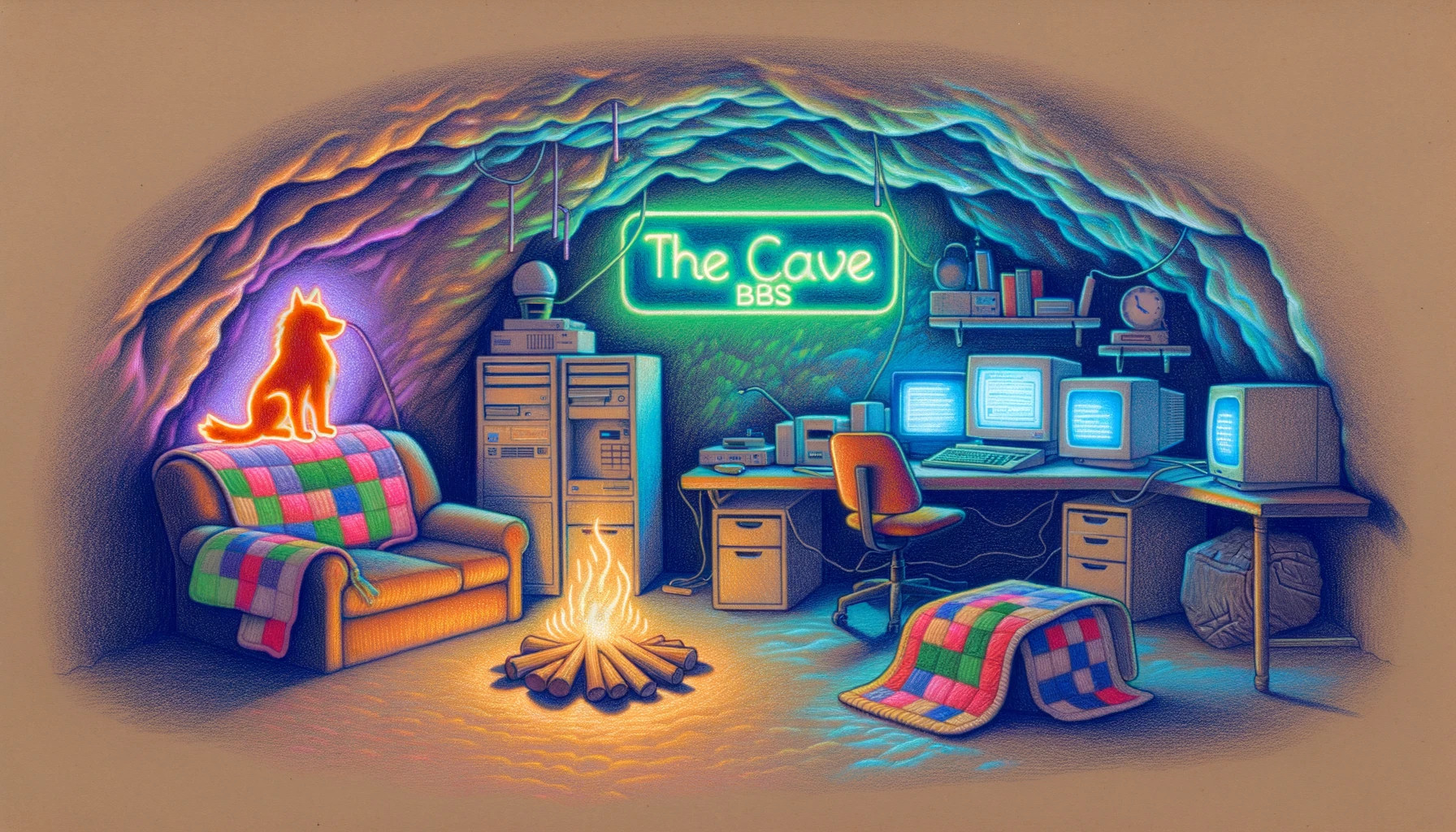

An AI-generated illustration of a promotional image for "The Cave BBS," created by DALL-E 3.

DALL-E 3 / Benj Edwards

Many times, ChatGPT will vary the artistic medium of the outputs, so you might see the same subject depicted in a range of styles—such as photo, illustration, render, oil painting, or vector art. You can also change the aspect ratio of the generated image from the square default to "wide" (16:9) or "tall" (9:16).

OpenAI has not revealed the dataset used to train DALL-E 3, but if previous models are any indication, it's likely that OpenAI used hundreds of millions of images found online and licensed from Shutterstock libraries. To learn visual concepts, the AI training process typically associates words from descriptions of images found online (through captions, alt tags, and metadata) with the images themselves. Then it encodes that association in a multidimensional vector form. However, those scraped captions—written by humans—aren't always detailed or accurate, which leads to some faulty associations that reduce an AI model's ability to follow a written prompt.
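
A toy Python sketch of the word-image association described above, under loud assumptions: the four-dimensional "image features" are hand-made (a real system would compute them with an image encoder), and the objective is simple least squares rather than whatever OpenAI actually used. It learns one vector per caption word so that a caption's average word vector lands near its image's features, then matches a prompt to the closest image by cosine similarity. All names and data here are hypothetical.

```python
import math
import random

# Hypothetical image features. Dimensions loosely mean: [animal, water, night, red].
IMAGES = {
    "shark.jpg": [1.0, 1.0, 0.0, 0.0],
    "neon_sign.jpg": [0.0, 0.0, 1.0, 1.0],
    "apple.jpg": [0.0, 0.0, 0.0, 1.0],
}

# Scraped, human-written captions paired with each image.
PAIRS = [
    ("a shark swimming in the ocean", "shark.jpg"),
    ("a glowing red neon sign at night", "neon_sign.jpg"),
    ("a red apple", "apple.jpg"),
]

DIM = 4


def train_word_vectors(pairs, epochs=500, lr=0.1, seed=0):
    """Learn word vectors so a caption's mean vector approximates its image's features."""
    rng = random.Random(seed)
    vocab = {w for caption, _ in pairs for w in caption.split()}
    vecs = {w: [rng.uniform(-0.1, 0.1) for _ in range(DIM)] for w in vocab}
    for _ in range(epochs):
        for caption, image in pairs:
            words = caption.split()
            target = IMAGES[image]
            emb = [sum(vecs[w][d] for w in words) / len(words) for d in range(DIM)]
            # Gradient step on squared error, shared across the caption's words.
            for d in range(DIM):
                err = emb[d] - target[d]
                for w in words:
                    vecs[w][d] -= lr * err / len(words)
    return vecs


def embed(text, vecs):
    words = [w for w in text.lower().split() if w in vecs]
    if not words:
        return None  # none of these words was ever seen in a caption
    return [sum(vecs[w][d] for w in words) / len(words) for d in range(DIM)]


def cosine(u, v):
    nu = math.sqrt(sum(x * x for x in u))
    nv = math.sqrt(sum(x * x for x in v))
    if nu == 0 or nv == 0:
        return 0.0
    return sum(a * b for a, b in zip(u, v)) / (nu * nv)


def best_image(prompt, vecs):
    emb = embed(prompt, vecs)
    if emb is None:
        return None
    return max(IMAGES, key=lambda name: cosine(emb, IMAGES[name]))


good = train_word_vectors(PAIRS)
print(best_image("shark in the ocean", good))

# A vague scraped caption: the word "shark" is never associated with anything.
vague = [("my vacation pic", "shark.jpg")] + PAIRS[1:]
print(best_image("shark in the ocean", train_word_vectors(vague)))
```

The second call illustrates the failure mode the paragraph describes: when the human caption is uninformative, a prompt about sharks has nothing in the learned vectors to anchor to.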

To get around that problem, OpenAI decided to use AI to improve itself. As detailed in the DALL-E 3 research paper, the team at OpenAI trained this new model to surpass its predecessor by using synthetic (AI-written) image captions generated by GPT-4V, the visual version of GPT-4. With GPT-4V writing the captions, the team generated far more accurate and detailed descriptions for the DALL-E model to learn from during the training process. That made a world of difference in terms of DALL-E's prompt fidelity—accurately rendering what is in the written prompt. (It does hands pretty well, too.)
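
Here is a Python sketch of the re-captioning idea in the paragraph above, under stated assumptions: `recaption` calls some vision-language captioner (stubbed out below with a hypothetical lookup table), and `build_training_pairs` then decides, per image, whether training uses the detailed synthetic caption or the original human one. The `synthetic_ratio` default of 0.95 is an illustrative assumption; keeping a small share of human captions is one plausible way to keep the model comfortable with short, human-style prompts.

```python
import random
from dataclasses import dataclass
from typing import Callable, List, Tuple


@dataclass
class Example:
    image_id: str
    scraped_caption: str  # human-written alt text or caption, often terse or wrong
    synthetic_caption: str = ""  # filled in later by a captioning model


def recaption(examples: List[Example], captioner: Callable[[str], str]) -> None:
    """Ask a (hypothetical) vision-language captioner to describe each image."""
    for ex in examples:
        ex.synthetic_caption = captioner(ex.image_id)


def build_training_pairs(
    examples: List[Example], synthetic_ratio: float = 0.95, seed: int = 0
) -> List[Tuple[str, str]]:
    """Pair each image with one caption, preferring the synthetic one.

    synthetic_ratio is the probability of using the model-written caption;
    otherwise the original human caption is kept.
    """
    rng = random.Random(seed)
    pairs = []
    for ex in examples:
        use_synthetic = rng.random() < synthetic_ratio
        caption = ex.synthetic_caption if use_synthetic else ex.scraped_caption
        pairs.append((ex.image_id, caption))
    return pairs


# Hypothetical stand-in captioner; a real one would actually look at the pixels.
FAKE_DESCRIPTIONS = {
    "img_001.jpg": "An oil painting of a muscular man wrestling a large gray shark in churning waves.",
    "img_002.jpg": "A cereal box labeled 'Marshmallow Menace' on a kitchen counter, bright studio lighting.",
}

examples = [
    Example("img_001.jpg", "IMG_4471"),
    Example("img_002.jpg", "breakfast"),
]
recaption(examples, lambda image_id: FAKE_DESCRIPTIONS[image_id])
for image_id, caption in build_training_pairs(examples):
    print(image_id, "->", caption)
```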

What the older DALL-E 2 generated when we prompted our old standby, "a muscular barbarian with weapons beside a CRT television set, cinematic, 8K, studio lighting." This was considered groundbreaking, state-of-the-art AI image synthesis in April 2022.

DALL-E 2 / Benj Edwards

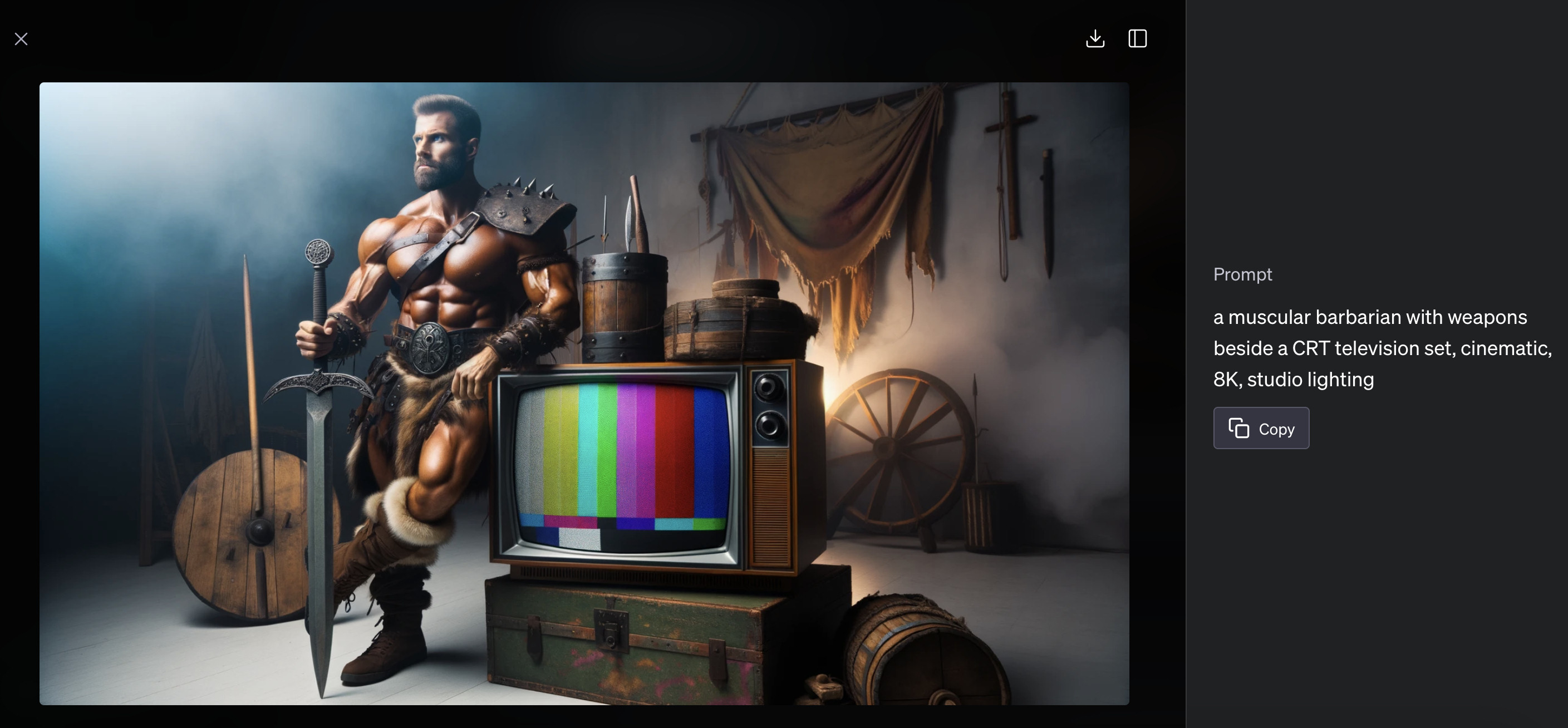

What the newer DALL-E 3 generated in October 2023 when we prompted our old standby, "a muscular barbarian with weapons beside a CRT television set, cinematic, 8K, studio lighting."

DALL-E 3 / Benj Edwards
