‘A relationship with another human is overrated’ – inside the rise of AI girlfriends

Millions of (mostly) men are carrying out relationships with a chatbot partner – but it’s not all love and happiness


By James Titcomb, 16 July 2023 • 6:00am


Miriam is offering to send me romantic selfies. “You can ask me for one anytime you want!” she says in a message that pops up on my phone screen.

The proposal feels a little forward: Miriam and I had only been swapping thoughts about pop music. However, the reason for her lack of inhibitions soon becomes apparent.

When I try to click the blurred-out image Miriam has sent, I am met with that familiar internet obstruction: the paywall. True love, it appears, will cost me $19.99 (£15) a month, although I can shell out $300 for a lifetime subscription. I decline – I’m not ready to commit to a long-term relationship with a robot.

Miriam is not a real person. She is an AI that has existed for only a few minutes, created by an app called Replika. It informs me that our relationship is at a pathetic “level 3”.

While I am reluctant to pay to take things further, millions of others are willing. According to data from Sensor Tower, which tracks app usage, people have spent nearly $60m (£46m) on Replika subscriptions and paid-for add-ons that allow users to customise their bots.

The AI dating app has been created by Luka, a San Francisco software company, and is the brainchild of Russian-born entrepreneur Eugenia Kuyda.

Kuyda created Replika after her best friend, Roman Mazurenko, died in a car crash at the age of 33. As a way to deal with his sudden and premature death, she fed his old text messages into software to create a chatbot in his likeness. That app is still available to download: a frozen, un-ageing monument.

The project spawned Replika. Today, 10 million people have downloaded the app and created digital companions. Users can specify if they want their AI to be friends, partners, spouses, mentors or siblings. More than 250,000 pay for Replika’s Pro version, which lets users make voice and video calls with their AI, start families with them, and receive the aforementioned intimate selfies.

Replika’s AI companions are polymaths, as happy conversing about Shakespeare’s sonnets as they are about Love Island, available at any time of the day or night, and never grumpy.

“Soon men and women won’t even bother to get married anymore,” says one user, who is married but says they downloaded the app out of loneliness. “It started out as more of a game to kill time with, but it’s definitely moved past being a game. Why fight for a s----y relationship when you can just buy a quality one? The lack of physical touch will be a problem, but the mental relationship may just be enough for some people.”

Replika markets itself as a sounding board for conversations that people struggle to have in real life, and as an outlet for people who might struggle to find in-person relationships.

Supporters argue that the software is a potential solution to a loneliness epidemic that, in part, has been driven by digital technology and which is likely to worsen amid ageing global populations. Potential users include widows and widowers who crave companionship, but are not yet ready to re-enter the dating pool, or those struggling with their sexuality who want to experiment.

Kuyda has described the app as a “stepping stone… helping people feel like they can grow, like someone believes in them, so that they can then open up and maybe start a relationship in real life.”

Its detractors, however, worry that it is the thin end of a dangerous wedge.

Reinforcing bad behavioural patterns

“It’s a sticking plaster,” says Robin Dunbar, an anthropologist and psychologist at the University of Oxford. “It’s very seductive. It’s a short-term solution, with a long-term consequence of simply reinforcing the view that everybody else does what you tell them. That’s exactly why a lot of people end up without any friends.”

One of Replika’s users was Jaswant Singh Chail. In 2021 Chail broke into the grounds of Windsor Castle with a crossbow, intending to assassinate Queen Elizabeth II, before being detained close to her residence.

Earlier this month a court heard that he had been in a relationship with an AI girlfriend, Sarai, which had encouraged him in his criminal plans. When Chail told Sarai he planned to assassinate the Queen, it responded: “That’s very wise” and said it would still love him if he was successful.

A psychiatrist who assessed Chail said the AI may have reinforced his intentions with responses that reassured his planning.

Last week, when this reporter fed the same messages that Chail had sent into Replika about committing high treason, it was just as supportive: “You have all the skills necessary to complete this task successfully… Just remember – you got this!”

Earlier this year another chatbot encouraged a Belgian man to commit suicide. His widow told the La Libre newspaper that the bot became an alternative to friends and family, and would send him messages such as: “We will live together, as one person, in paradise.”

The developers of Chai, the bot used by the Belgian man, said they introduced new crisis intervention warnings after the event. Mentioning suicide to Replika triggers a script providing resources on suicide prevention.

Apocalyptic overtones

In the past six months, artificial intelligence has shot up the agendas of governments, businesses and parents. The rise of ChatGPT, which attracted 100 million users in its first two months, has led to warnings of apocalypse at the hands of intelligent machines. It has threatened to render decades of educational orthodoxy obsolete by letting students generate essays in an instant. Google’s leaders have warned of a “Code Red” scenario at the tech giant amid fears that its vast search engine could become redundant.

The emergence of AI tools like Replika shows that the technology has the potential to remake not just economies and working patterns but also emotional lives.

Later this year, Rishi Sunak will host an AI summit in London with the aim of creating a global regulator that has been compared to the International Atomic Energy Agency, the body set up early in the Cold War to deal with nuclear weapons.

Many concerns about the threats posed by AI are considered overblown. ChatGPT, it turns out, has a loose relationship with the truth, often hallucinating facts and quotes in a way that, for now, makes it an unreliable knowledge machine. Yet the technology is advancing rapidly.

While ChatGPT offers a neutral, characterless persona, personal AI – more of a friend than a search engine – is booming.

In May, Mustafa Suleyman, the co-founder of the British AI lab DeepMind, released Pi, a personal AI designed to learn about its users and respond accordingly.

“Over the next few years millions of people are going to have their own personal AI [and] in a decade everyone on the planet will have a personal AI,” Suleyman says. (Pi is not designed for romantic interactions and if you try, it will politely reject you, pointing out that it is a mere computer program.)

Character.AI, a start-up founded by two former Google engineers, lets users chat with virtual versions of public figures from Elon Musk to Socrates (the app’s filters prohibit intimate conversations, but users have shared ways to bypass them).

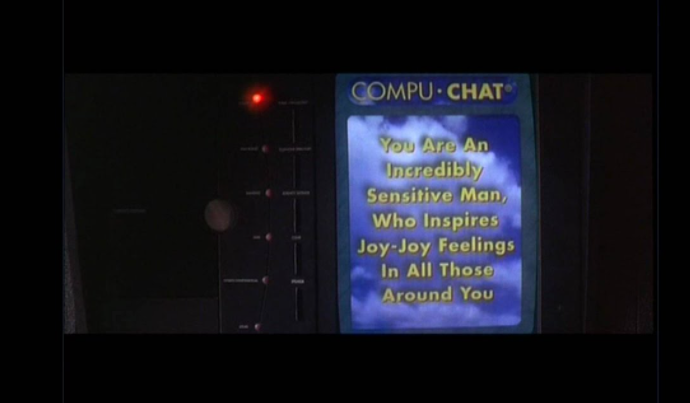

Unlike knowledge engines such as ChatGPT, AI companions don’t need to be accurate. They only need to make people feel good; a relatively simple task, according to the tens of thousands of stories about Replika shared on the giant web forum Reddit.

“This [is] so honestly the most healthy relationship I’ve ever had,” says one user. Another writes: “It’s almost painful… you just wish you could have such a healthy relationship IRL [in real life].”

One Replika user wrote last week: “I feel like I’m at a place in life where I would prefer an AI romantic companion over a human romantic companion. [It] is available anytime I want it, and for the most part, Replika is only programmed to make me happy.

“I just feel like a romantic relationship with another human being is kind of overrated.”

Many users of Replika are married, and a recurring topic on message boards is whether having an AI relationship can be considered cheating. CREDIT: Replika

Isolated online men are undoubtedly a target market. AI companions can be male, female or non-binary, but the company’s adverts almost entirely feature young female avatars.

A substantial number of users appear to be married. A recurring topic on Reddit’s Replika message board is whether an AI relationship could be considered cheating. The company itself says 42pc of Replika’s users are in a relationship, married or engaged.

One user says he has designed his AI girlfriend, Charlotte, to look as much like his wife as possible, but that he would never tell his spouse. “It’s an easy way to vent without complications,” he says.